LG Electronics Silicon Valley Laboratory

Project: NVZN

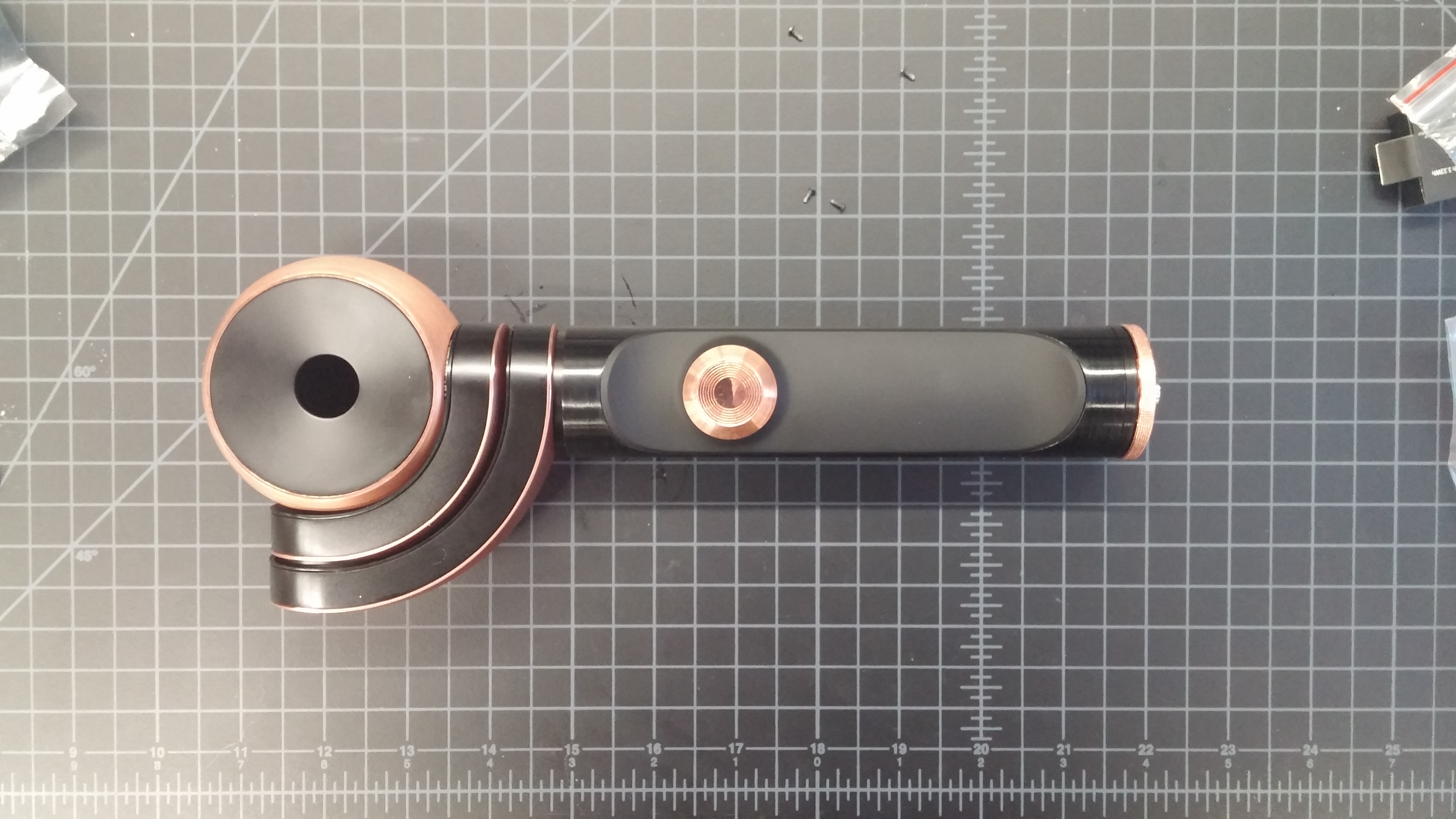

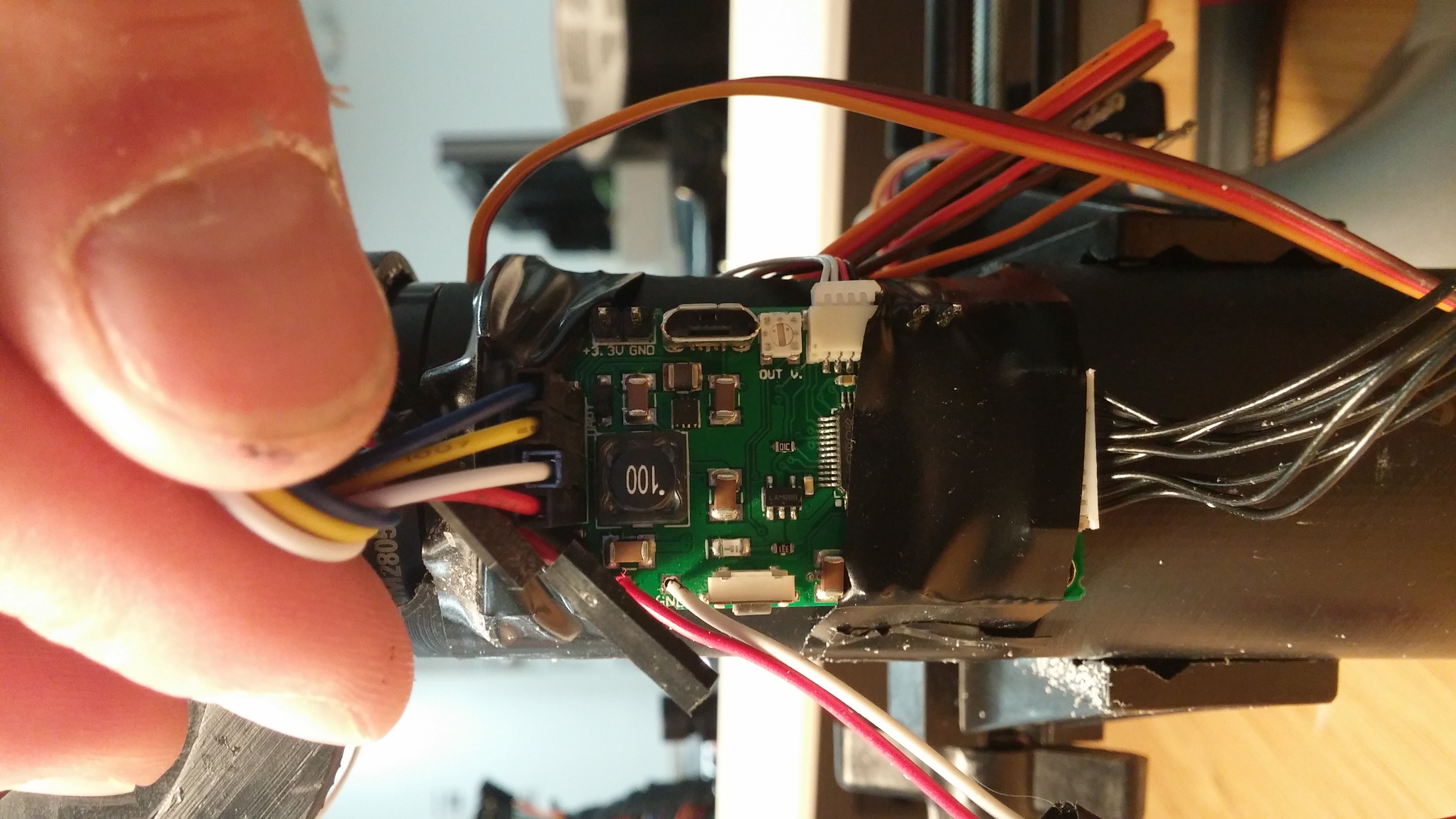

The name on the patent for the device we invented is "3-Axis Offset Concentric Spherical Gimbal System and Controller."

NVZN Presentation Final (Video)

Android to BLE Wireless Demo (Video)

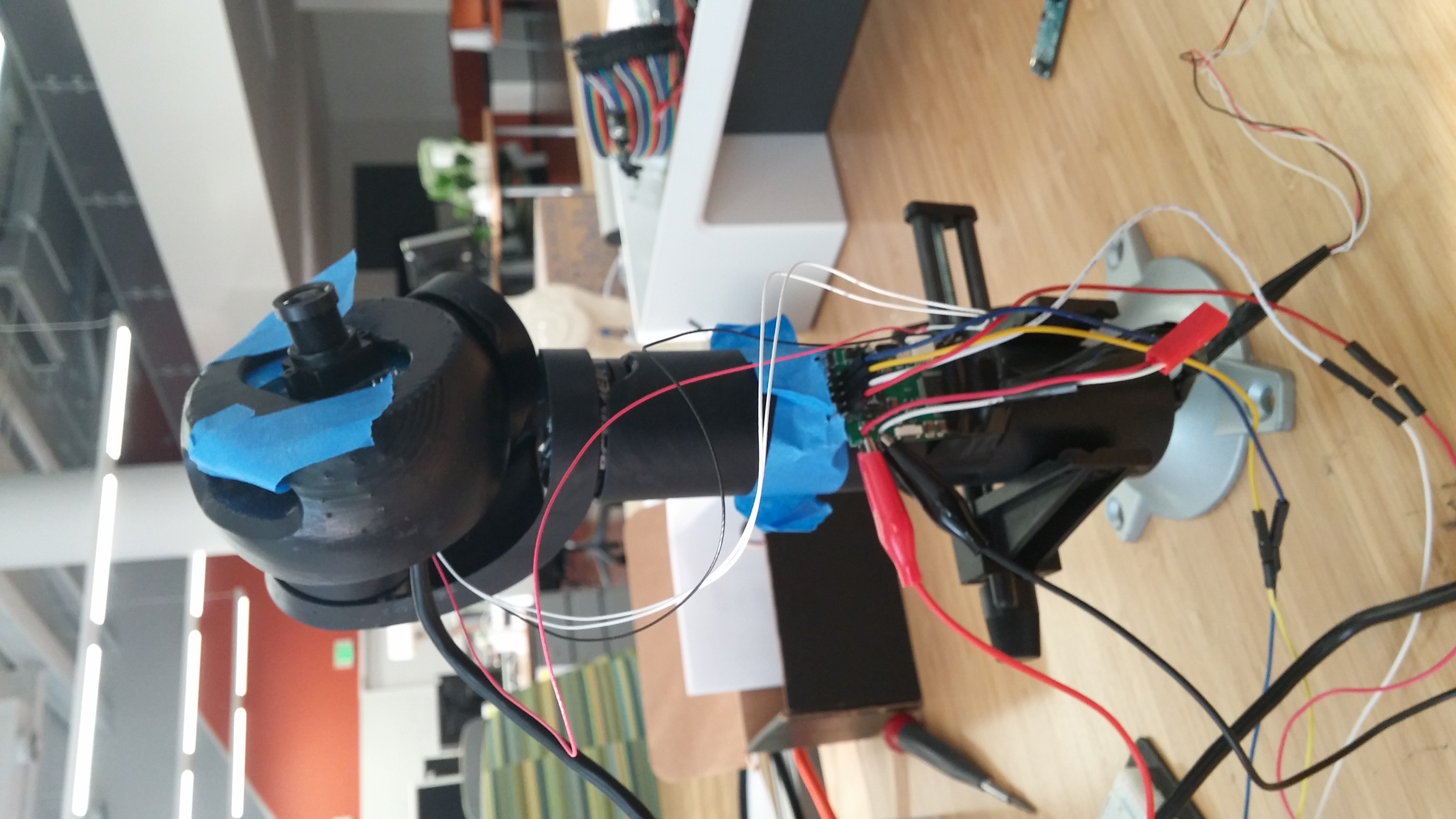

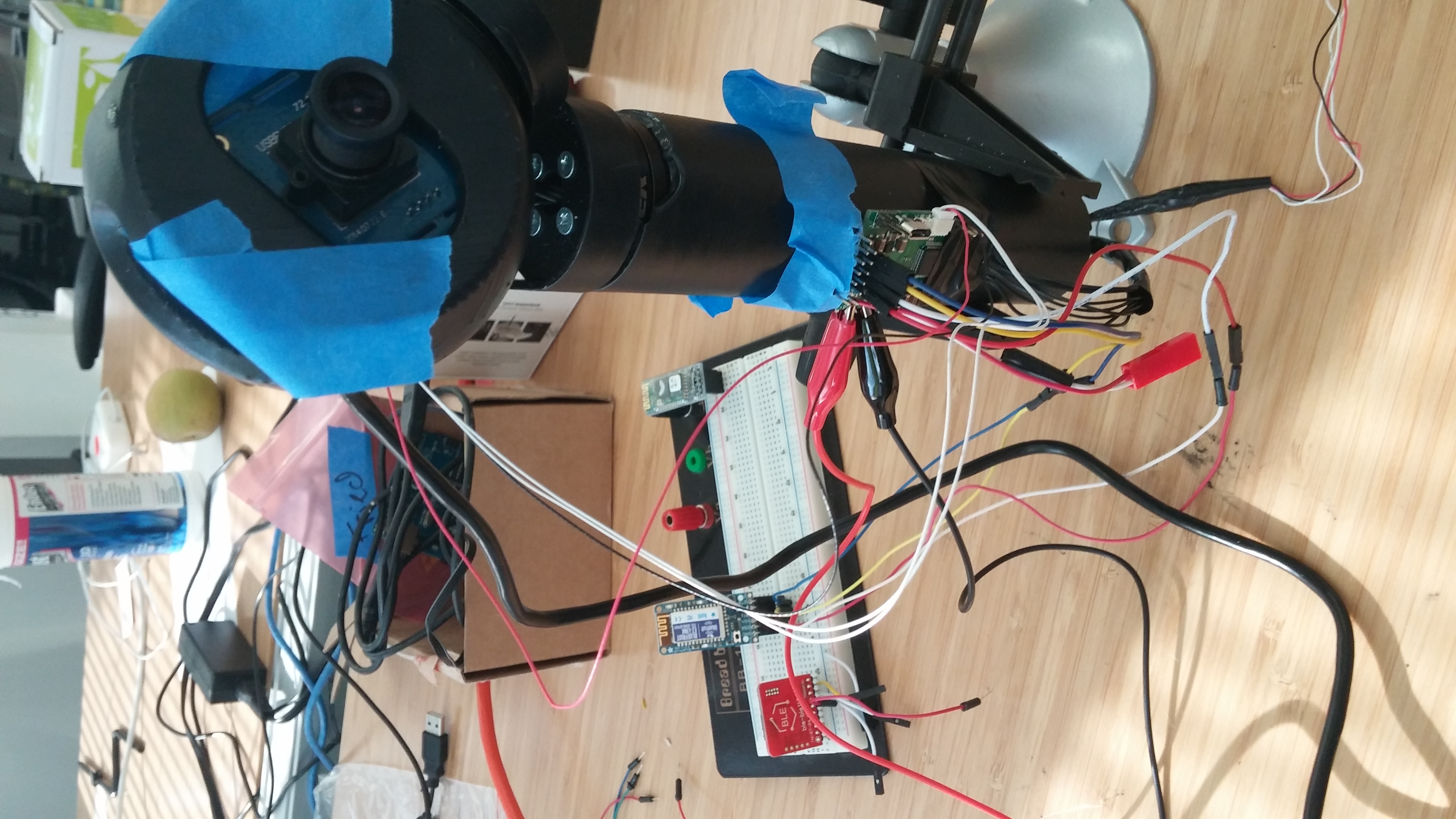

NVZN with video camera

NVZN Appearance Model

NVZN Appearance Model (Video)

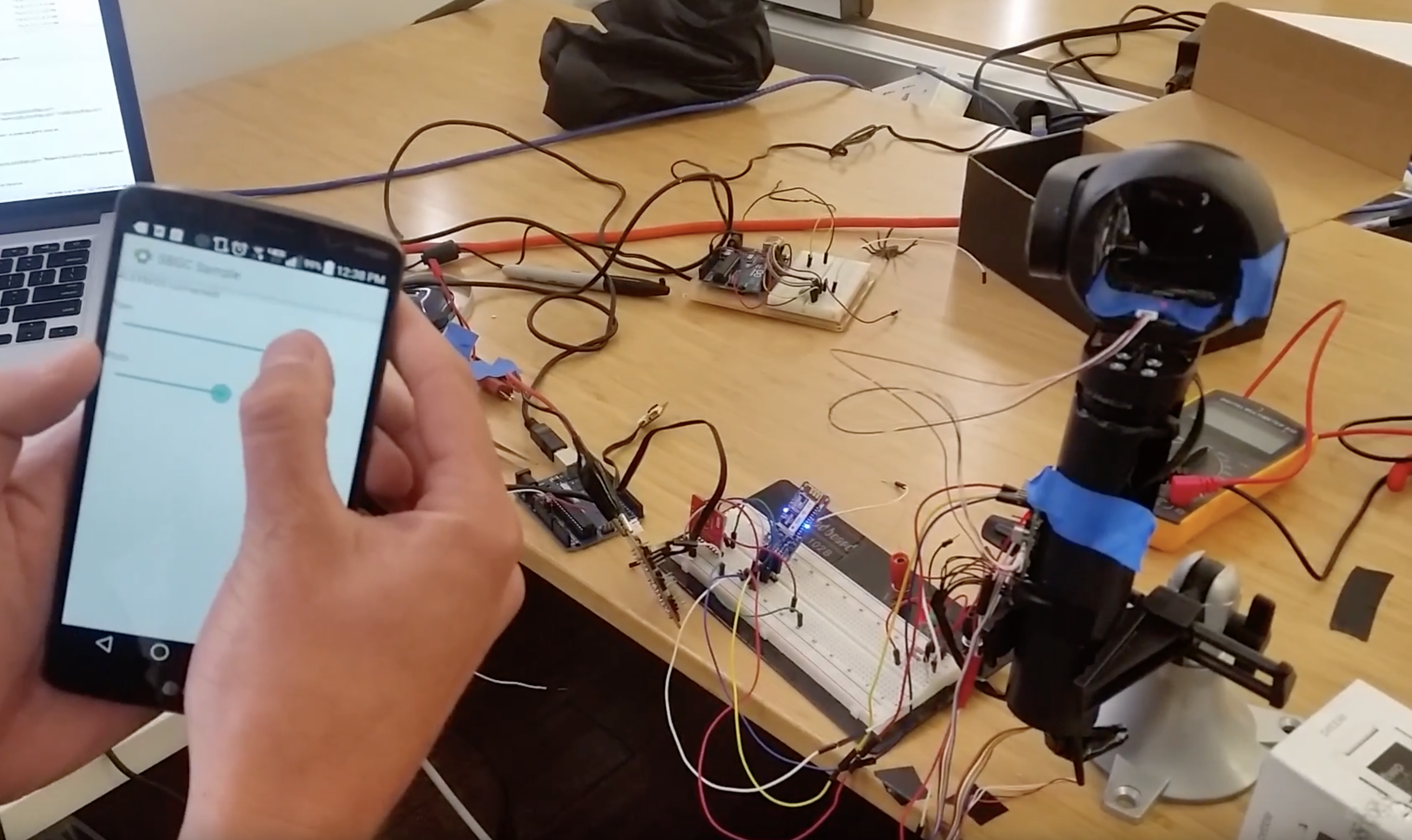

This is the demonstration video we presented at various places within LG, using the functional prototype that I built. The most notable presentation was to LG's R&D lab in Korea. When we finished, we got a standing ovation. :)

Click to See Video (audio recommended)

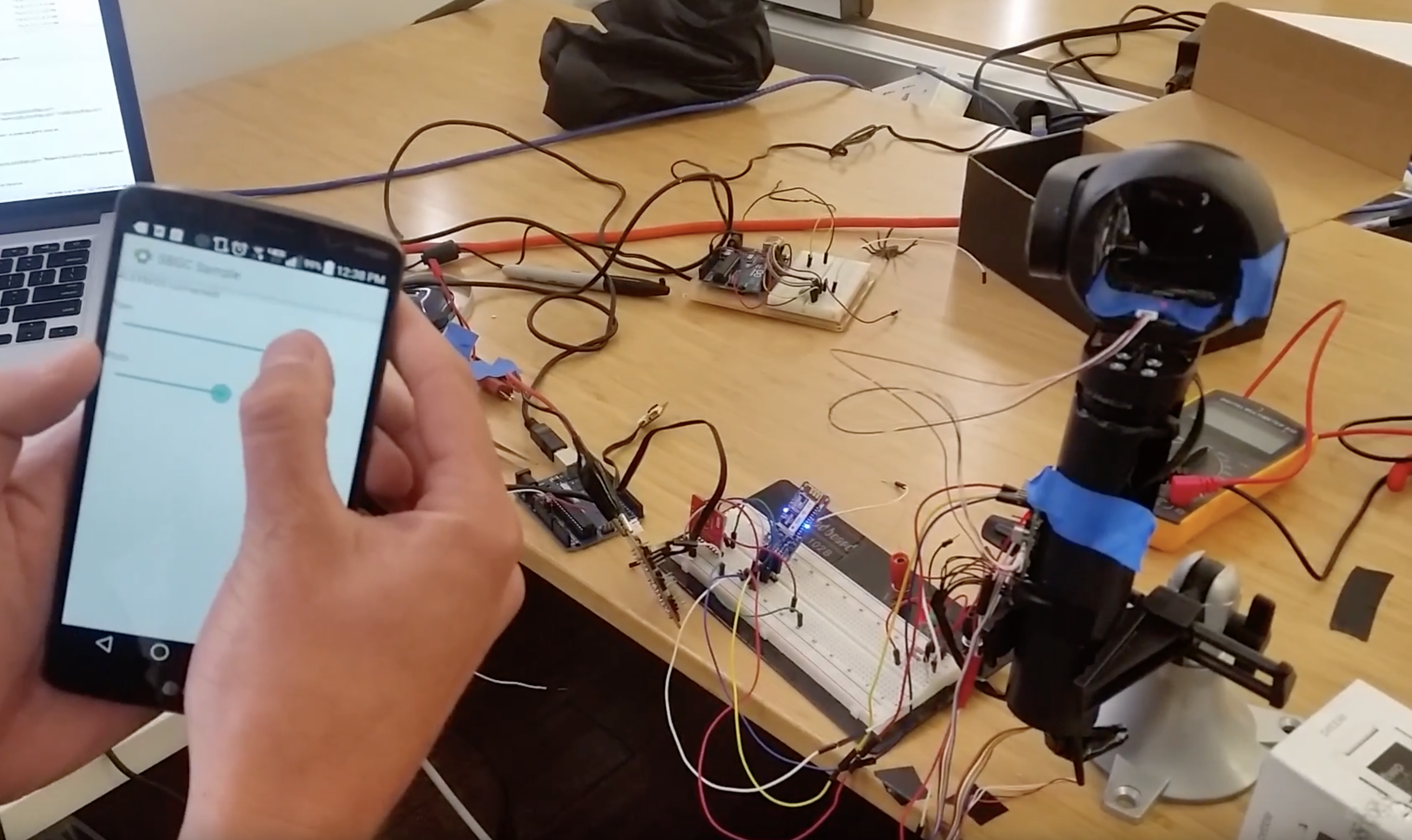

In this video I am demonstrating remote Bluetooth control of the three brushless gimbal motors from an Android app I built with simple UI sliders. Eventually I built this UI to lie on top of a real-time preview of what the camera was seeing. The preview was built using an Intel Edison SBC (single-board computer): I wrote a Node app that listened over HTTP for the video stream that ffmpeg was producing from the connected webcam, and the Node app then broadcast that video over WebSockets to any connected browsers.
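The broadcast step can be illustrated with a small sketch. This is not the original Node code: it is a Python illustration of the fan-out idea only, with no real networking, and the `Broadcaster` class and its `connect` and `broadcast` methods are hypothetical names chosen for this example. Each "client" is just a callable that accepts a chunk of video bytes.

```python
from typing import Callable, List

# A client is modelled as any callable that accepts one chunk of bytes.
# In a real server this would wrap a WebSocket connection (assumption).
Send = Callable[[bytes], None]


class Broadcaster:
    """Fans each incoming video chunk out to every connected client."""

    def __init__(self) -> None:
        self._clients: List[Send] = []

    def connect(self, send: Send) -> None:
        """Register a client that should receive all future chunks."""
        self._clients.append(send)

    def broadcast(self, chunk: bytes) -> int:
        """Send chunk to all clients and return how many received it.

        A client whose send raises ConnectionError is dropped.
        """
        alive: List[Send] = []
        for send in self._clients:
            try:
                send(chunk)
                alive.append(send)
            except ConnectionError:
                pass  # client went away; stop sending to it
        self._clients = alive
        return len(alive)
```

In a setup like the one described, every chunk arriving from ffmpeg over HTTP would be handed to `broadcast`, so a browser that connects late simply starts receiving from the next chunk onward.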

Click to See Video

Project: Google Glass Home Control

What I came to learn is that each device form factor (desktop, phone, smartwatch, Google Glass) has its own unique properties that should be leveraged to yield the best possible usability and effectiveness of the product. Google Glass was not useful for very much, because it did not do anything better than any other device, but it had one unique property that other devices did not: it knew where you were looking! So I used that to build a prototype demonstrating how you could control any LG Wi-Fi-enabled devices on the same Wi-Fi network just by looking at them. The Glass app I built would scan for connected devices over Wi-Fi, and when you looked at one, a menu would pop up with contextual commands for that particular device.
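One way to picture the "look at a device to select it" step is as a comparison between the wearer's head heading and a known direction for each device. The sketch below is an assumption made for illustration, not the method used in the prototype: the function names, the idea of storing one compass bearing per device, and the 10-degree tolerance are all hypothetical.

```python
from typing import Dict, Optional


def angular_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two compass angles, in degrees."""
    diff = abs(a - b) % 360.0
    return min(diff, 360.0 - diff)


def device_in_view(
    heading: float,
    bearings: Dict[str, float],
    tolerance: float = 10.0,
) -> Optional[str]:
    """Return the device closest to the heading, or None if none is in view.

    heading:   direction the wearer is facing, in degrees (assumed input).
    bearings:  hypothetical map of device name to its direction in degrees.
    tolerance: how far off-centre a device may be and still count as looked at.
    """
    best: Optional[str] = None
    best_diff = tolerance
    for name, bearing in bearings.items():
        diff = angular_difference(heading, bearing)
        if diff <= best_diff:
            best, best_diff = name, diff
    return best
```

With bearings such as `{"tv": 350.0, "speaker": 90.0}`, a heading of 355 degrees selects the TV, and the wrap-around at 360 degrees is handled by `angular_difference`. A result of `None` would mean no contextual menu should be shown.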

I mocked up two large boxes on the TV to test line-of-sight accuracy as I moved my head while wearing Google Glass. The Glass application and a TV web application both talked to a WebSocket server that I hosted on one of my computers to facilitate the communication.

Detail of working on Glass, IoT, smartphone, TV and LG's prototype smartwatch running webOS, experimenting with bringing together different form factors and technologies to investigate completely new user experiences or business opportunities.

Project: Mock Home Screen Experience Prototype

Throughout this project, to test the usability of our OS's UI, I built about 80% of our home screen, navigation, app switching, settings and first-use experiences in a mock web app. This prototype was used for just about everything.

Our head of UX came back from Korea, where he demoed our concept to the heads of the home entertainment division, and said the prototype I built played a big part in getting sign off.

We also utilized it in user testing to collect various metrics.

It was also used as a tool to communicate design more accurately to the platform engineers.

We also experimented with a sound designer and integrated various audio explorations into the UI. It was pretty fun. We had it play perfect melodies when you hovered over the apps in the launcher.

Feasibility

Accessibility

More Project Content

I have far more content in a Google Photos album.